Baby cries, simplified.

With the help of artificial intelligence, the ChatterBaby™ algorithm can predict why your baby is crying with over 90% accuracy.

Science

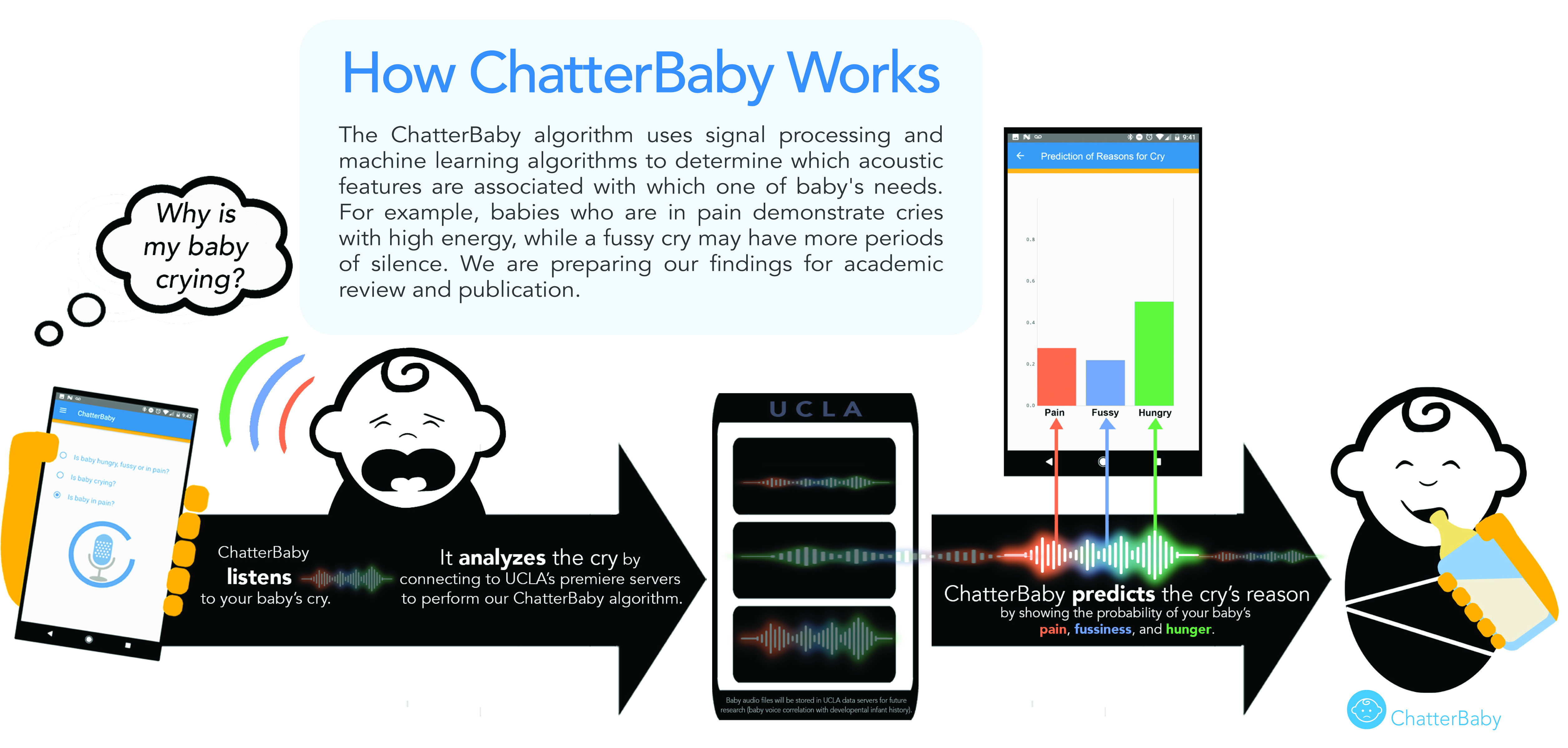

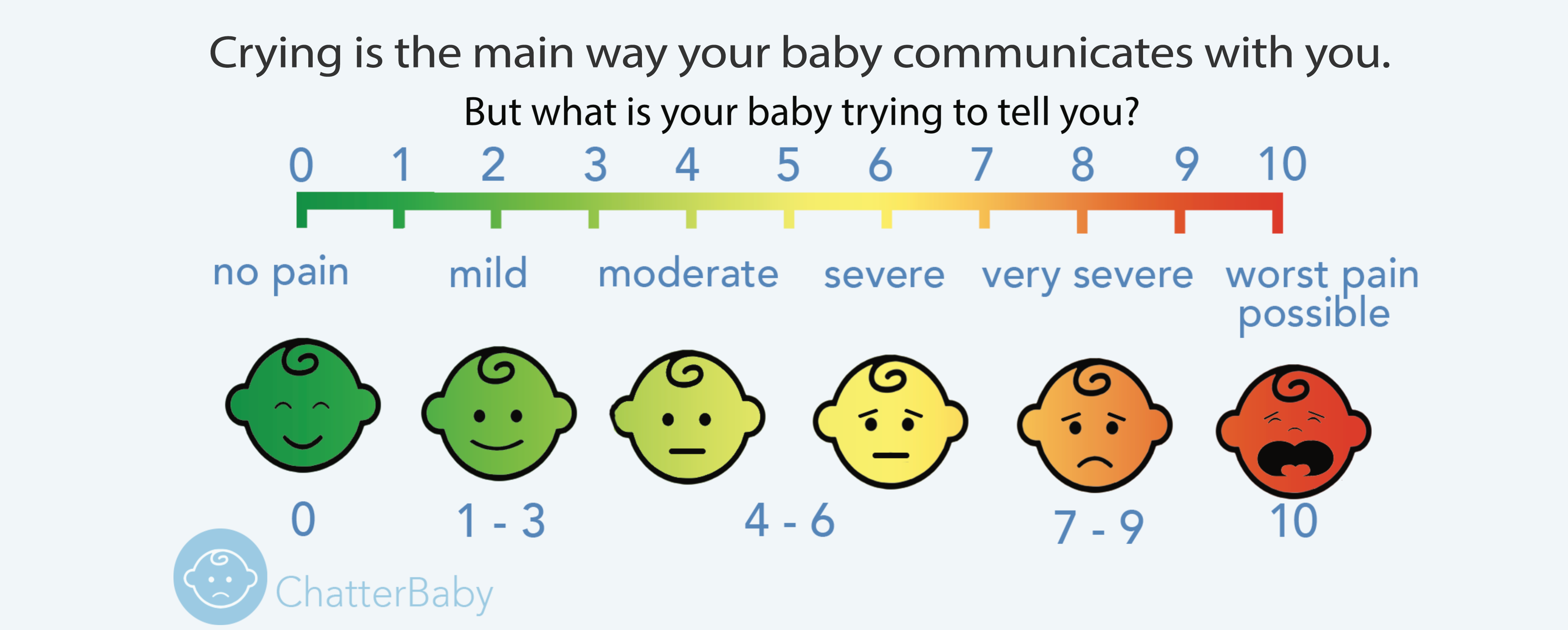

Accurate assessment of pain in babies is uniquely challenging and critically important. The human ear can interpret many sounds, but ChatterBaby™ can differentiate specific acoustic characteristics using our algorithm, which runs on UCLA servers. Through machine learning, ChatterBaby™ is able to adapt and learn how to identify your baby's cries more accurately by comparing them to the cries in our database.

How did we get our cry database? First, painful cries were recorded from babies who were receiving vaccines or ear piercings. Other cries were also collected (fussy, hungry, separation anxiety, colic, scared), and were meticulously labeled by a panel of veteran mothers. Only the cries whose labels were universally agreed upon by our veteran moms were used to teach our algorithm, to make sure that our algorithm would have the same ear as the "gold standard."
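The "universally agreed upon" rule above can be illustrated with a minimal sketch. This is not the ChatterBaby™ pipeline itself; the function name `unanimous_labels`, the clip and rater identifiers, and the data layout are all hypothetical, chosen only to show how a recording would be kept when every rater assigns it the same label.

```python
from collections import defaultdict


def unanimous_labels(ratings):
    """Return {clip_id: label} for clips where every rater chose the same label.

    `ratings` is an iterable of (clip_id, rater_id, label) tuples.
    This layout is an assumption made for illustration only.
    """
    labels_by_clip = defaultdict(set)
    for clip_id, _rater_id, label in ratings:
        labels_by_clip[clip_id].add(label)
    # A clip is kept only if all raters agreed, i.e. exactly one distinct label.
    return {
        clip_id: next(iter(labels))
        for clip_id, labels in labels_by_clip.items()
        if len(labels) == 1
    }


# Hypothetical ratings: clip_01 is unanimous, clip_02 is disputed.
ratings = [
    ("clip_01", "rater_a", "pain"),
    ("clip_01", "rater_b", "pain"),
    ("clip_01", "rater_c", "pain"),
    ("clip_02", "rater_a", "hungry"),
    ("clip_02", "rater_b", "fussy"),
]

training_labels = unanimous_labels(ratings)
print(training_labels)
```

In this toy example only `clip_01` survives, because the raters disagreed about `clip_02`. Discarding disputed clips trades dataset size for cleaner labels.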

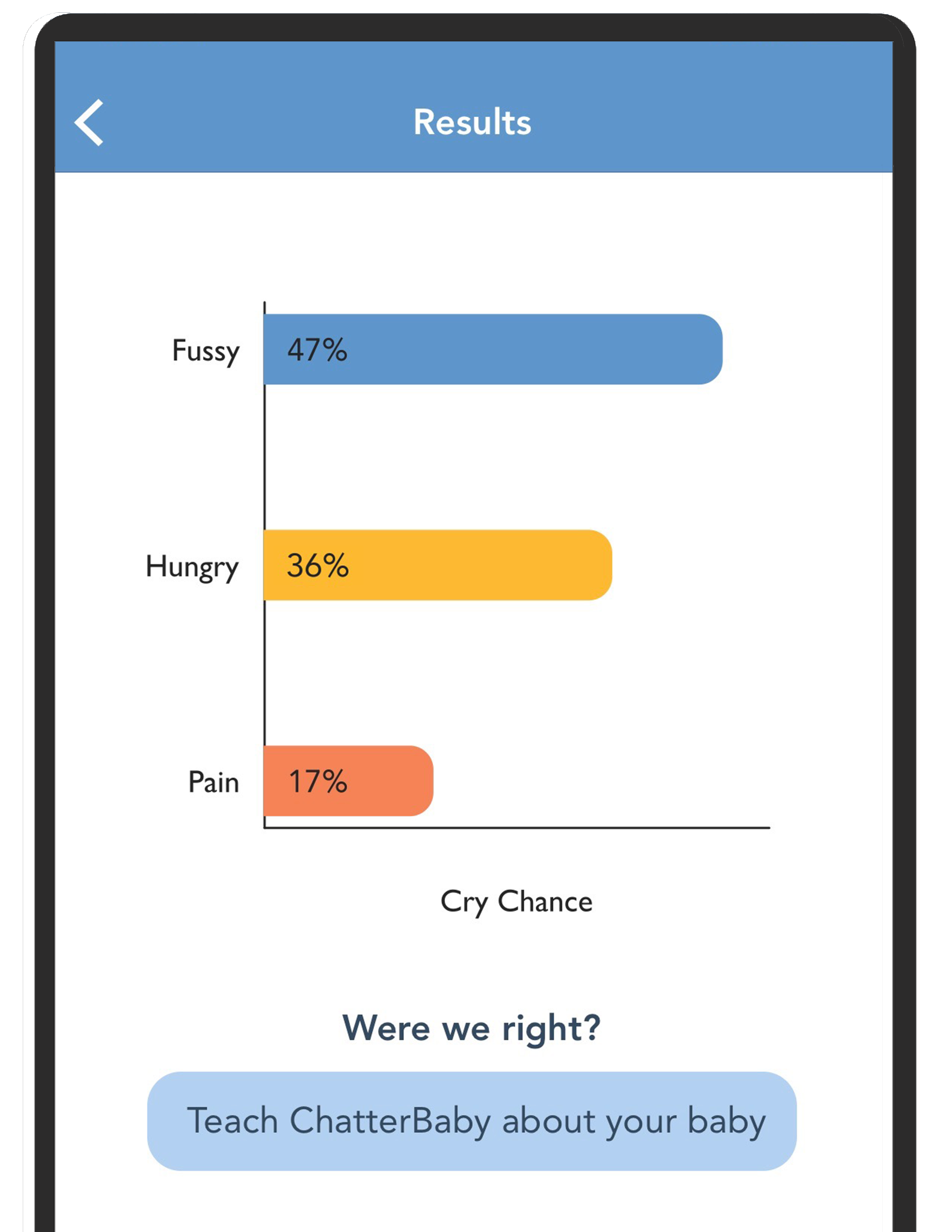

Our current models predict only three states (hunger, pain, fussy) because these states are not developmentally dependent and have consistent acoustic patterns for newborns and older babies.

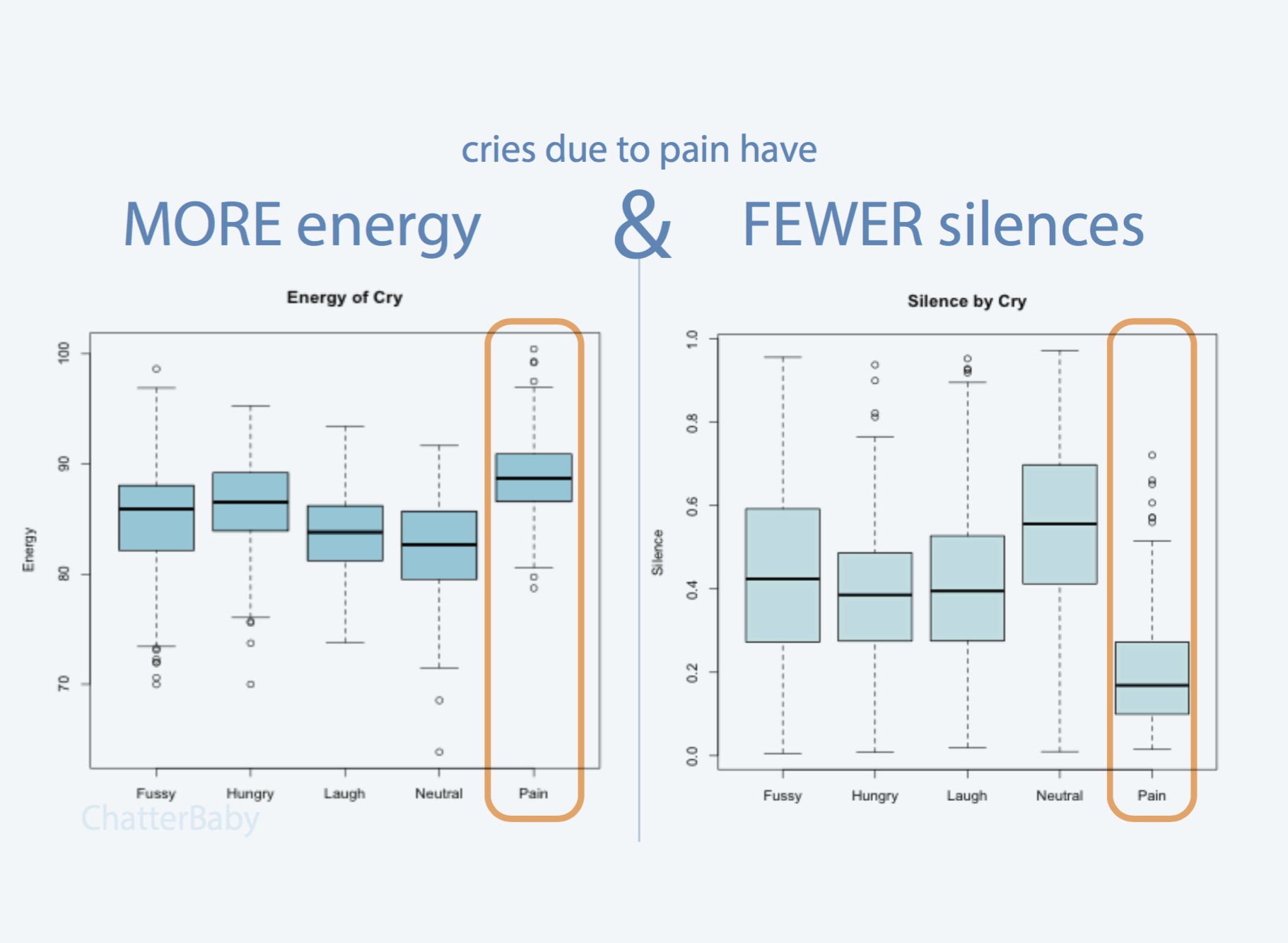

A 6-week-old baby is unlikely to have separation anxiety, but he will be hungry, sometimes every 45 minutes when cluster feeding. The acoustic characteristics we use to predict which type of cry is occurring include the strength, duration, and amount of silence within a cry (see graphs!), among thousands of other features that our algorithms compute. By taking these characteristics into account, ChatterBaby™ predicts the reason for the cry. In fact, ChatterBaby™ has correctly identified cries due to pain over 90% of the time in recordings of babies getting vaccinated.
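To make the three named characteristics concrete, here is a minimal sketch of how strength, duration, and amount of silence could be measured from raw audio samples. It is an illustration under stated assumptions, not the ChatterBaby™ feature set: the function name `cry_features`, the use of RMS amplitude as "strength", the 400-sample frame length, and the 0.02 silence threshold are all hypothetical choices.

```python
import math


def cry_features(samples, sample_rate, frame_len=400, silence_threshold=0.02):
    """Compute three simple features from audio samples scaled to [-1.0, 1.0].

    All parameter defaults are illustrative assumptions, not published values.
    """
    if not samples:
        raise ValueError("samples must not be empty")

    def rms(chunk):
        # Root-mean-square amplitude, used here as a stand-in for "strength".
        return math.sqrt(sum(s * s for s in chunk) / len(chunk))

    # Split the signal into fixed-length frames and count the quiet ones.
    frames = [samples[i:i + frame_len] for i in range(0, len(samples), frame_len)]
    silent_frames = sum(1 for frame in frames if rms(frame) < silence_threshold)

    return {
        "strength_rms": rms(samples),
        "duration_s": len(samples) / sample_rate,
        "silence_fraction": silent_frames / len(frames),
    }


# Synthetic one-second signal: half a second of a 440 Hz tone, then silence.
sample_rate = 8000
tone = [0.5 * math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(4000)]
signal = tone + [0.0] * 4000

features = cry_features(signal, sample_rate)
print(features)
```

For this synthetic signal, half of the frames fall below the threshold, so the silence fraction is 0.5. A real system would compute many more features, as the text notes, and would need thresholds tuned to actual recordings.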

Your baby's voice is unique. We regularly update and revise our algorithms with new data, which means we will retune our algorithm to your baby if you send us your baby's data. If you would like our future algorithms to be better tuned to your baby, please send us a labeled video or audio sample of your baby crying, babbling, or laughing through our Donation Form, and be sure to include his or her age in months. Why is your baby crying? If you "feed" ChatterBaby™ your data, our algorithm will "learn" your baby's unique acoustic features and improve its prediction the next time it hears your baby. Before you send us data, please refer to the Consent Form and our Privacy Policy.

Data

When you record your baby's cry via the ChatterBaby™ app, the app sends the cry to our servers, which remove as much background noise as possible so that the analysis can focus on the cry. Most of the time, the researchers don't listen to the cries; only our machines do!

The servers run the cry through our ChatterBaby™ algorithm and save it for future research.

We remove as much personal information as possible from audio files to better focus on the audio of the baby, and our algorithms, not humans, analyze the cries! See our FAQ page for more information.

Research

ChatterBaby™ is a project at UCLA inspired by Dr. Ariana Anderson, mother of four and professor at the UCLA Semel Institute for Neuroscience and Human Behavior.

Born at the UCLA Code for the Mission, ChatterBaby™ was originally designed to translate infant sounds into labeled states. It was initially targeted at helping Deaf parents understand their baby's cries so that they could respond appropriately to their infants' and toddlers' vocal cues.

The app is now aimed at helping parents better understand their babies and, in turn, at helping researchers at UCLA understand how cries affect the babies themselves. Researchers at UCLA are using the survey responses and cry recordings that parents provide in the ChatterBaby™ app to study how a baby's cries may relate to their development and their likelihood of autism.

For more information about our researchers, meet the team! To find out more about the ChatterBaby™ app, see our FAQ.